The first prompt I typed into DALL-E 2 was “Cubist painting of a tuxedo cat playing piano.” The AI tinkered and buffered for a minute and then delivered four absolutely adorable images of cats playing piano. The surprise, whimsy, and color impressed me — after all, many of us have seen some of the more disturbing results of AI-generated images.

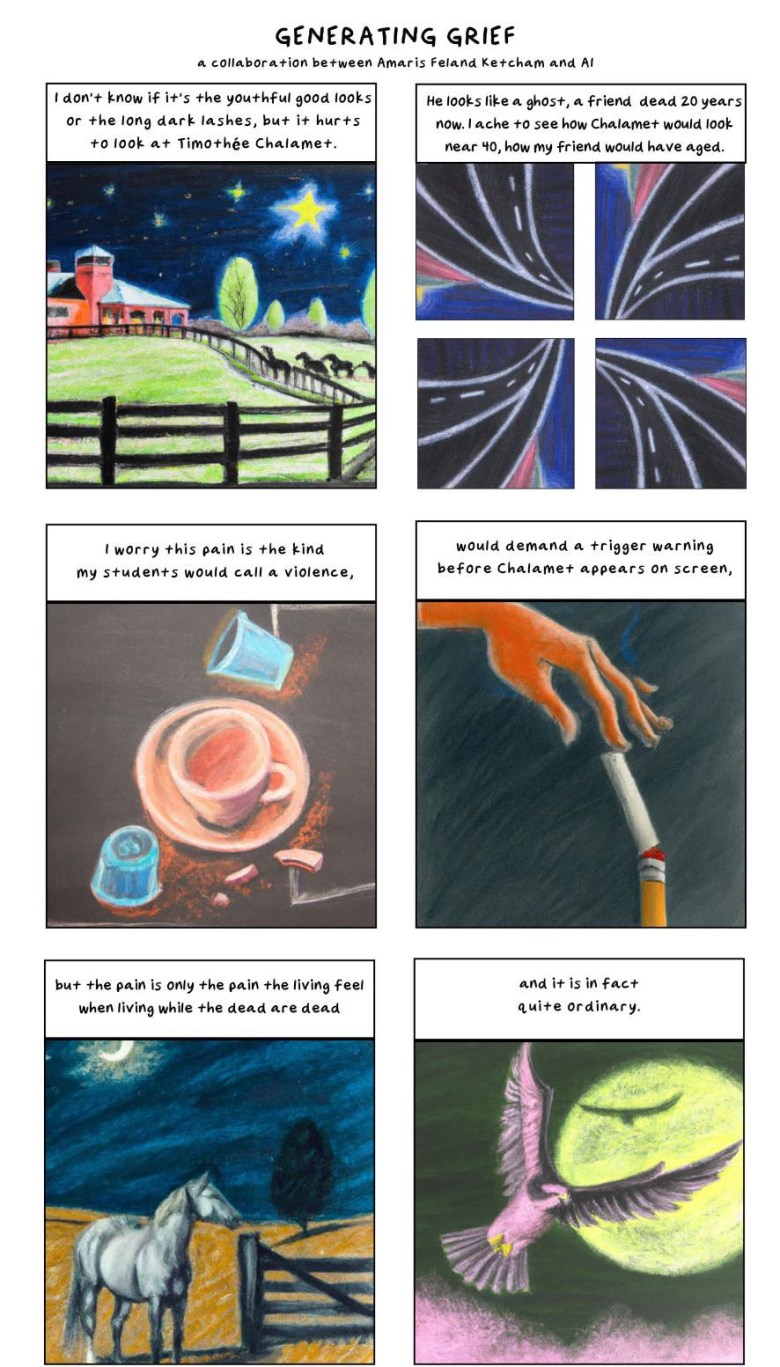

I’ve been teaching and writing and dabbling with comics for a couple of years now, so it seemed natural to experiment with DALL-E 2 to make a text-image collaboration. Could DALL-E 2 and I develop a shared, unique “voice” in our creative output?

Typically, I make comics in one of two ways: I draw and write something quickly in a diary or I spend months scripting, writing and rewriting, drawing and erasing, scanning and editing a half dozen pages. Making comics is a laborious process, especially for single-creator comics. In this “auteur” model of making comics, the person who writes the comic also illustrates it. Stemming from underground comix (transgressive comics that did not meet the approval of the Comics Code Authority) and alternative comics (alternative to, say, mainstream Marvel and DC comics), these hand-drawn comics reflect the unique, developed style of their creator. From the beautiful brushwork of Craig Thompson to John Porcellino’s clean lines and distilled images, a comic creator’s panels become as recognizable as a writer’s voice or an artist’s style.

Cartoonist Lynda Barry famously champions the beauty of the hand-drawn line and the individuality of its expression. Even if you are “bad at drawing,” you make an image unique to you; Barry would say your drawing is more “alive” than the drawing of a professional draftsman. There is, she contends, something personal and real about an inexact representational drawing. Even the physical appearance of the text becomes another method of communication, a kind of image, because handwriting carries with it an innate expression of personality and individuality, offering another layer of tone and meaning.

A few of DALL-E 2’s drawings have some of this “living” quality. After I uploaded one of my own sketches of a mischievous cat in a witch’s hat, DALL-E 2 made four variations that, to me, looked like they could be “inhabited” characters. But it’s hard to consistently replicate these qualities with a new prompt (to be fair, this is also a challenge for me).

A full collaboration between two AIs to “generate” a comic is possible now that OpenAI has launched ChatGPT and InstructGPT, which generate writing when prompted. But comics rely on a poetic precision of line: there’s not much space for expository text, and I like to avoid having the words duplicate the work the images are doing.

Describing the image I wanted the AI to deliver took a different kind of finesse and revision than I was accustomed to. The prompt box reminded me that longer, more specific descriptions will give better results. The writing work was therefore doubled: scripting the comic and describing the illustrations I wanted. “Chalk pastel drawing of a night sky with a lot of stars” proved a disappointment but generated a new idea: “Chalk pastel drawing of a Kentucky horse farm at night with a lot of stars in the sky.” Was I training the AI? Was the AI training me? Or did we engage in an iterative process as collaborators? I ended the experiment wondering what it might take to make a full collaboration in which the images complemented the writing and felt expressive, “alive,” rather than soulless.